File Review System Optimisation

Streamlining the compliance workflow for 240+ file reviewers, handling complex question sets and dynamic case logic.

The Scale of the Problem

The system had to support 1,300+ advisors and 4 teams of 8 reviewers. The existing process was buckling under the weight of massive, complex spreadsheets and insecure email chains. A single file review could contain up to 83 specific compliance questions, making manual tracking inefficient and unsafe.

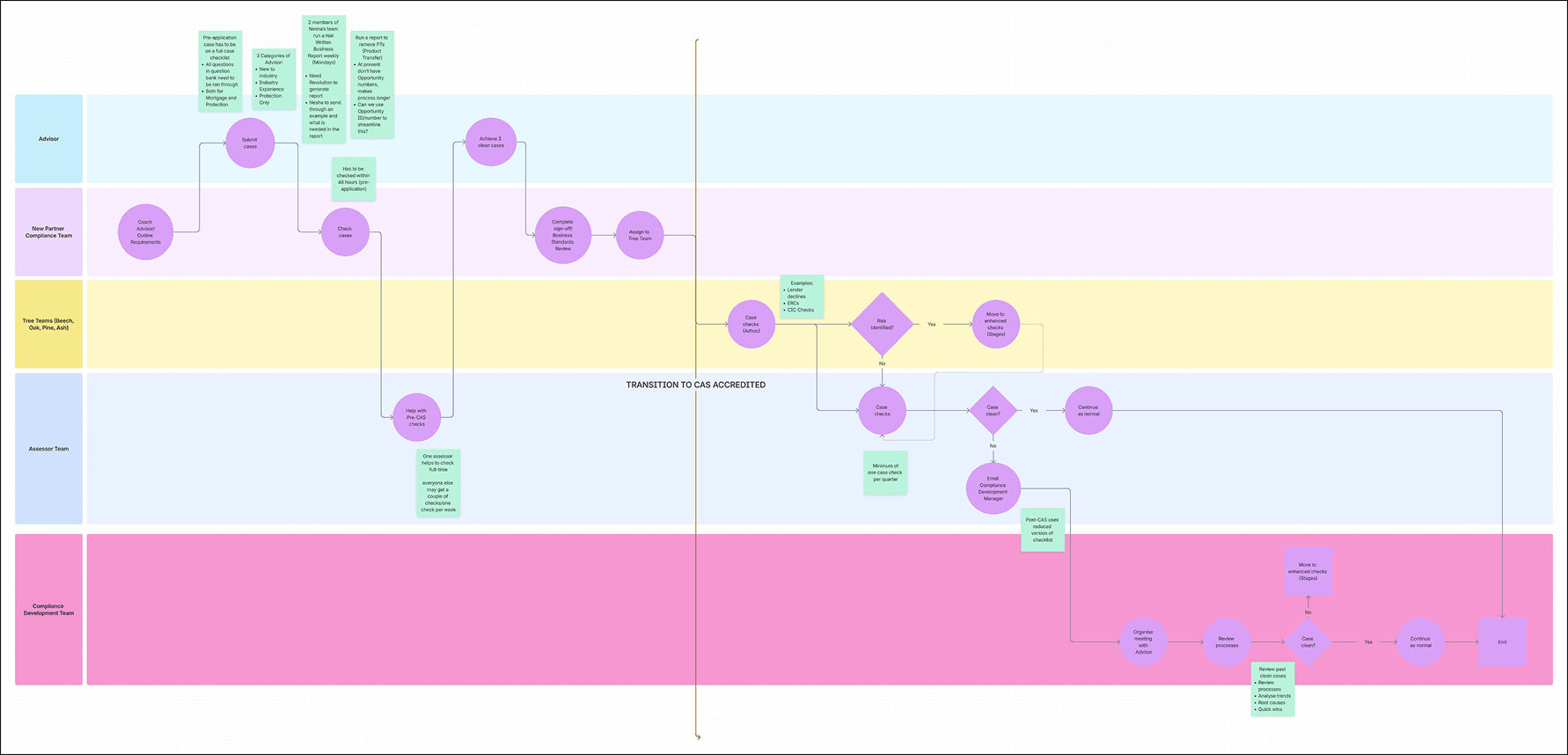

Visualising the Bottlenecks

To understand why the system was failing, we mapped the existing workflow. The diagram below reveals a rigid, linear pipeline trying to support massive volume. We discovered that "traffic jams" were occurring at every handoff point between the advisor and the reviewer.

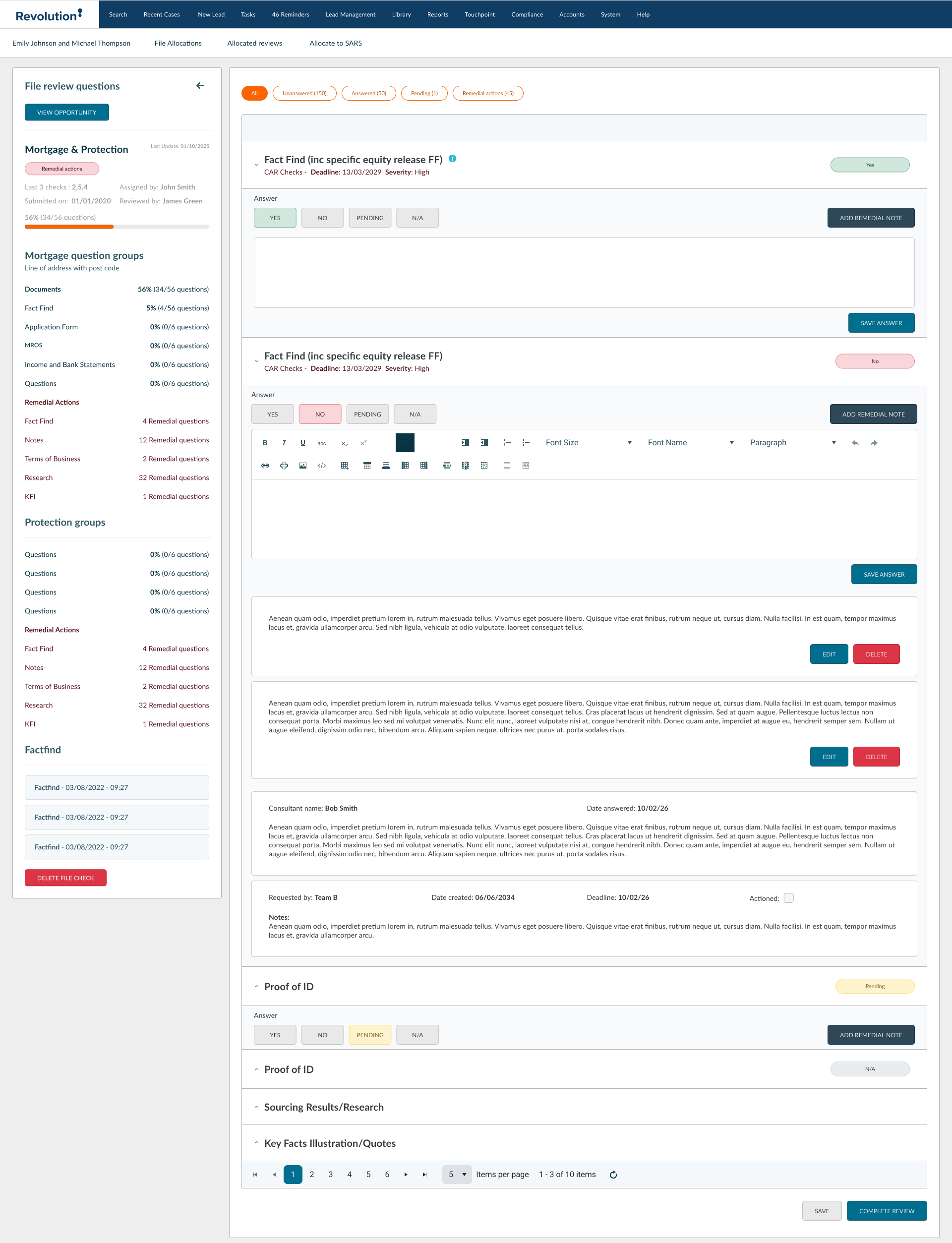

Managing Cognitive Load

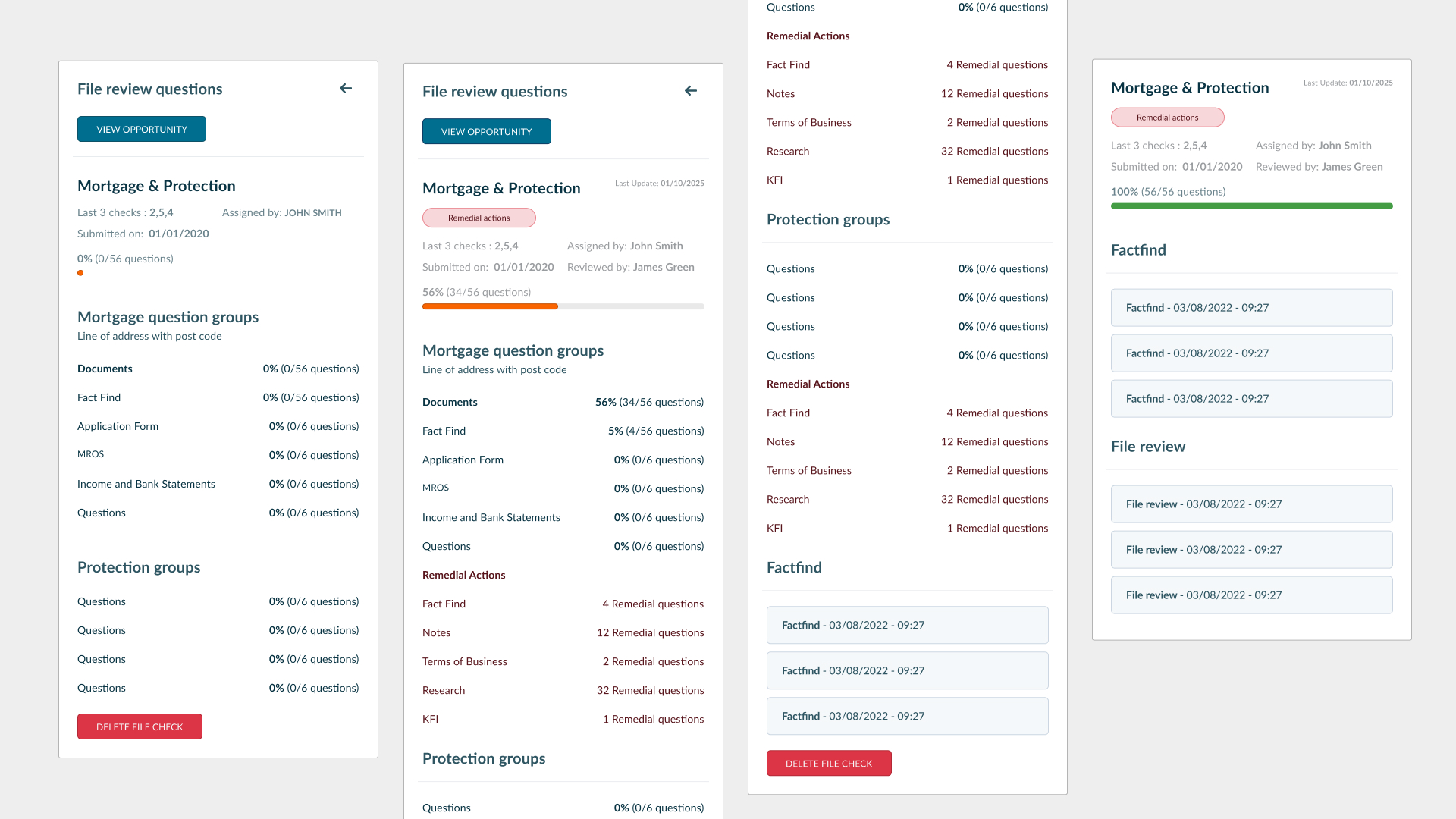

With up to 83 potential questions per file, reviewers were taking as long as 2 weeks to complete complex cases. To solve this, I introduced a Collapsible Sidebar Navigation. This split the "Three Main Screens" (Fact Find, Remedial Actions, Outcome) into manageable chunks, allowing users to jump between sections without losing context.

Team Workflows

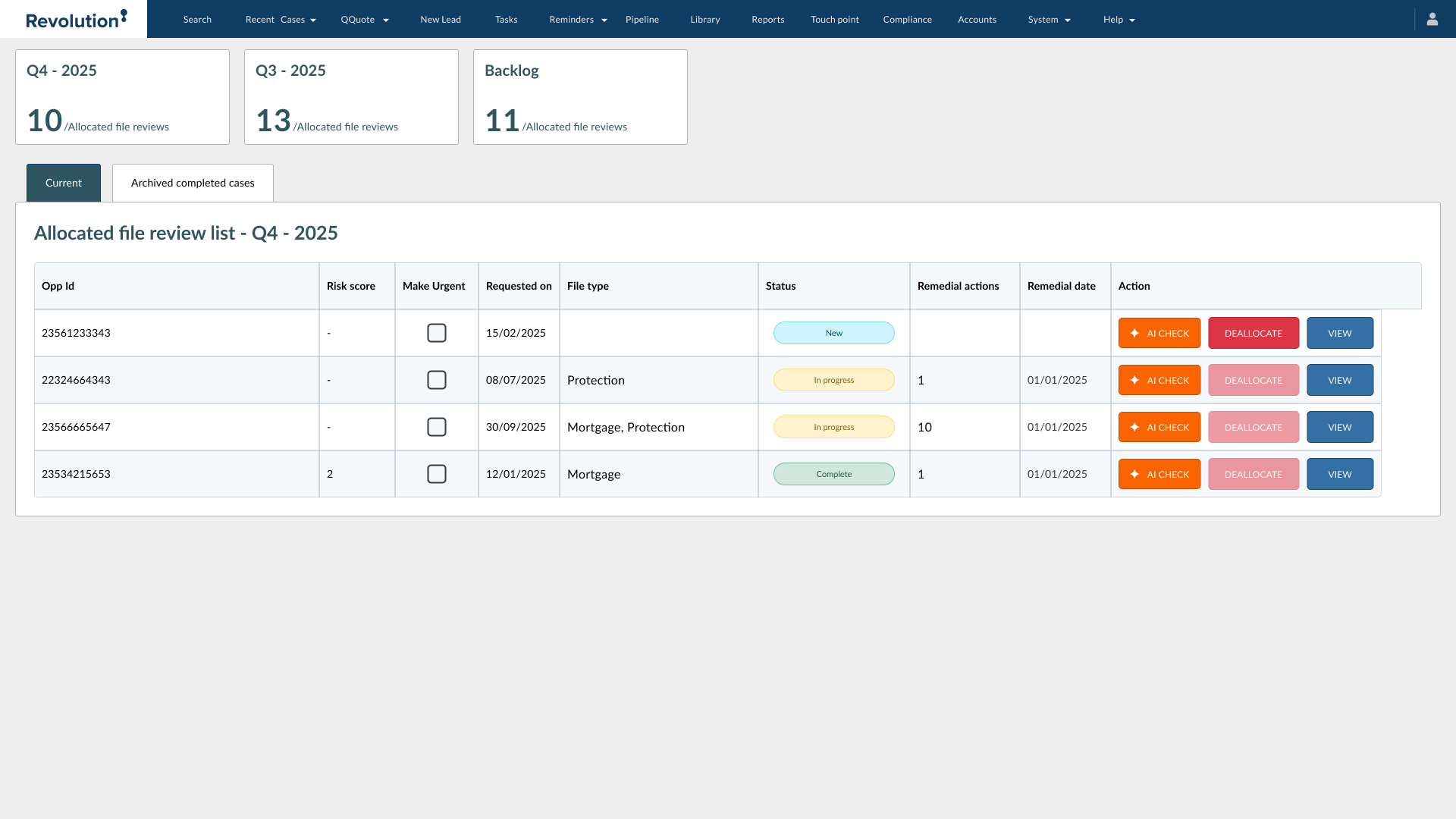

For the review teams, visibility was key. The dashboard needed to clearly separate "Allocated" reviews from the "To-Do" backlog. We used colour-coded status pills to allow Team Leads to spot 'At Risk' files instantly.

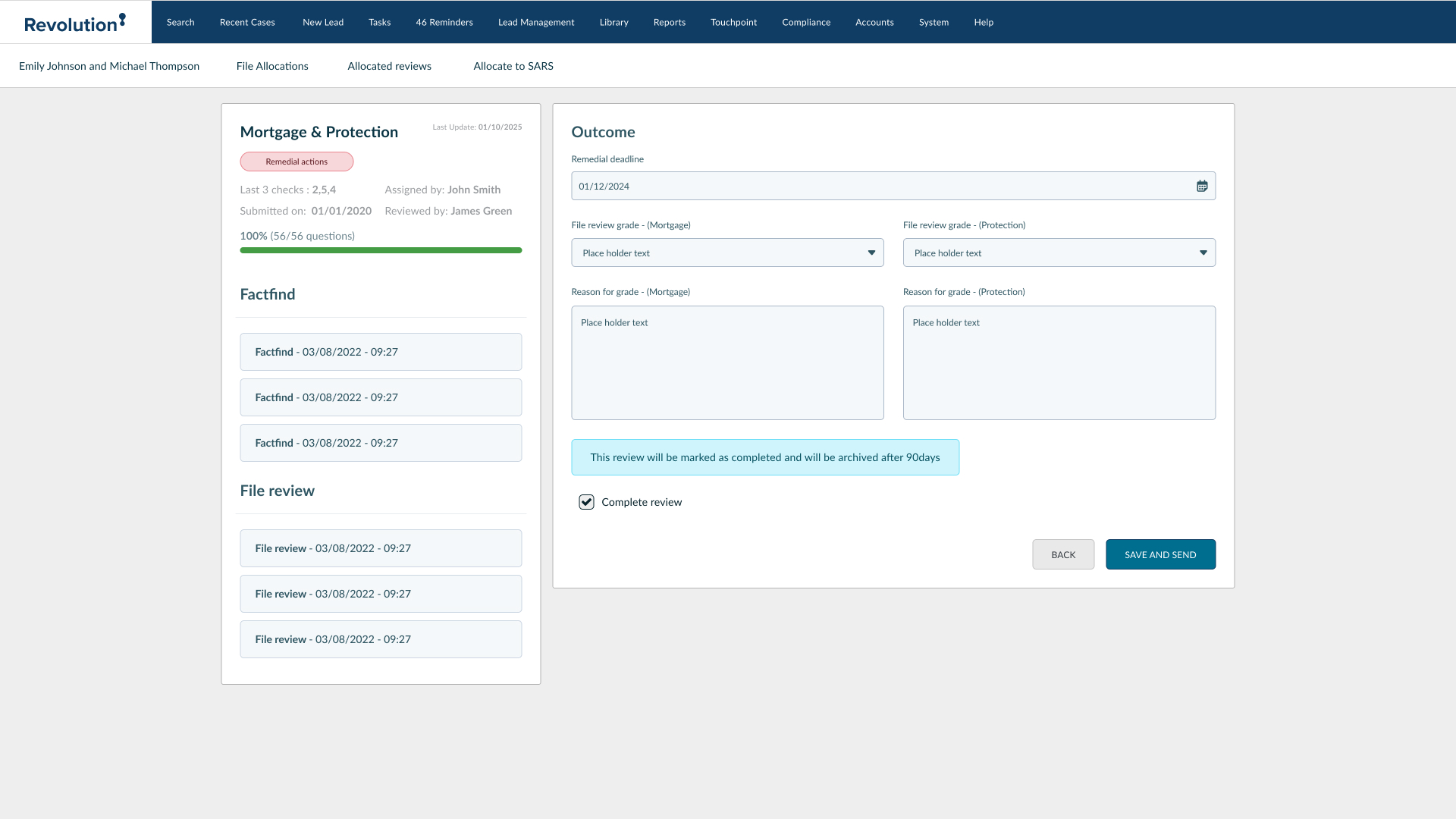

Defining the Outcome

The final step is the "Outcome" screen. We redesigned this to be binary and clear. Instead of ambiguous text fields, we implemented structured data inputs for Remedial Actions, ensuring that any failed review had a specific, trackable reason attached to it.
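One way to picture the structured Outcome data is as a small type with a validation step. This is a minimal sketch only: the field names (`reasonCode`, `note`) and the `outcomeErrors` helper are hypothetical and are not taken from the Revolution platform.

```typescript
// Hypothetical shape for a remedial action; field names are assumptions.
interface RemedialAction {
  reasonCode: string; // a specific, trackable reason for the failure
  note: string;       // optional supporting detail for the advisor
}

// The outcome is binary: a pass carries nothing extra,
// a fail must carry its remedial actions.
type ReviewOutcome =
  | { result: "pass" }
  | { result: "fail"; remedialActions: RemedialAction[] };

// Returns a list of problems; an empty list means the outcome can be saved.
function outcomeErrors(outcome: ReviewOutcome): string[] {
  if (outcome.result === "pass") {
    return [];
  }
  const errors: string[] = [];
  if (outcome.remedialActions.length === 0) {
    errors.push("A failed review needs at least one remedial action.");
  }
  outcome.remedialActions.forEach((action, index) => {
    if (action.reasonCode.trim() === "") {
      errors.push(`Remedial action ${index + 1} is missing a reason code.`);
    }
  });
  return errors;
}
```

Modelling the outcome as a two-branch union means a "fail" without a reason cannot be represented by accident, which mirrors the intent of replacing free-text fields.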

Scale

160 Daily Reviewers

Complexity

83 Data Points

Efficiency

+40% Speed

Phase 2: Intelligent Automation & Logic

1. The "JMan" AI Integration

The core goal was to digitise the "File Review" process within the Revolution platform, moving away from manual spreadsheets. We integrated an automated AI check that runs directly within the assessor's dashboard.

- The Workflow: The user clicks the orange "Run AI Check" button. A pop-up asks them to select the specific Policy/Checklist to test (e.g., Mortgage vs. Protection).

- Status Loop: The button cycles through states (AI Checking → Cancel → Download Report) to manage user expectations during API latency.
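The Status Loop can be sketched as a small state machine. The state and event names below are hypothetical; only the button labels come from the design, and the sketch assumes that clicking the button while a check is running acts as "Cancel".

```typescript
// Hypothetical state and event names; only the labels are from the design.
type AiCheckState = "idle" | "checking" | "reportReady";
type AiCheckEvent = "run" | "cancel" | "complete" | "fail";

// Allowed transitions. Any event not listed for a state is ignored.
const transitions: Record<AiCheckState, Partial<Record<AiCheckEvent, AiCheckState>>> = {
  idle: { run: "checking" },
  checking: { cancel: "idle", complete: "reportReady", fail: "idle" },
  reportReady: { run: "checking" },
};

// Pure transition function: returns the next state, or the same state
// when the event does not apply (e.g. "complete" while idle).
function nextState(state: AiCheckState, event: AiCheckEvent): AiCheckState {
  return transitions[state][event] ?? state;
}

// Label shown on the button in each state. While "checking", the button
// is assumed to offer Cancel when clicked.
const buttonLabel: Record<AiCheckState, string> = {
  idle: "Run AI Check",
  checking: "AI Checking",
  reportReady: "Download Report",
};
```

Keeping the transitions in one table makes it harder for the button to end up in an impossible state, such as offering a report download before the API has responded.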

The "Sync" Rule

A critical UX challenge was maintaining data integrity across three different screens: the Allocator, the Assessor, and the AI Pop-up.

The Solution: I designed a strict synchronisation rule. If a user changes the checklist category in the AI pop-up, the matching dropdown on the main dashboard updates automatically, and vice versa, preventing data mismatches.
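A common way to enforce a rule like this is a single shared source of truth that every screen subscribes to. The sketch below is illustrative only: `ChecklistSelection` and its methods are hypothetical names, not part of the actual build, and the two string variables stand in for the real dropdown components.

```typescript
type ChecklistCategory = "Mortgage" | "Protection";
type Listener = (category: ChecklistCategory) => void;

// Hypothetical shared store: the pop-up and the dashboard both read from
// and write to this one object instead of holding their own copies.
class ChecklistSelection {
  private category: ChecklistCategory;
  private listeners = new Set<Listener>();

  constructor(initial: ChecklistCategory) {
    this.category = initial;
  }

  get current(): ChecklistCategory {
    return this.category;
  }

  // Registers a screen and immediately pushes the current value to it.
  // Returns a function that unsubscribes the screen.
  subscribe(listener: Listener): () => void {
    this.listeners.add(listener);
    listener(this.category);
    return () => {
      this.listeners.delete(listener);
    };
  }

  // Called by whichever screen the user edits; all screens are notified.
  set(category: ChecklistCategory): void {
    if (category === this.category) {
      return; // no change, so no notifications and no update loops
    }
    this.category = category;
    this.listeners.forEach((listener) => listener(category));
  }
}

// Usage: two stand-in "dropdowns" stay in step regardless of who changes.
const selection = new ChecklistSelection("Mortgage");
let dashboardDropdown: string = "";
let popupDropdown: string = "";
selection.subscribe((category) => { dashboardDropdown = category; });
selection.subscribe((category) => { popupDropdown = category; });
selection.set("Protection"); // e.g. changed inside the AI pop-up
```

Because neither screen owns the value, the "vice versa" direction needs no extra code: a change made on the dashboard goes through the same `set` call.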

Split Scoring Logic

Historically, files had a single "Score" field. We moved to a nuanced 1-7 grading scale (Green/Amber/Red).

New Architecture: I separated scores into distinct "Mortgage" and "Protection" columns. This ensures that a complex case with multiple policy types receives accurate, granular grading rather than a blended average.
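As a rough illustration of the split, each case can carry an optional grade per policy type, with the colour band derived separately for each column. The case study does not state which grades fall into which band, so `exampleBands` below is a placeholder mapping and the type names are hypothetical.

```typescript
type Band = "Green" | "Amber" | "Red";
type PolicyType = "Mortgage" | "Protection";
type Grade = 1 | 2 | 3 | 4 | 5 | 6 | 7;

// A case may have a grade for one policy type, both, or neither.
type CaseScores = Partial<Record<PolicyType, Grade>>;

// PLACEHOLDER mapping: the real grade-to-band thresholds are an assumption.
const exampleBands: Record<Grade, Band> = {
  1: "Green", 2: "Green",
  3: "Amber", 4: "Amber", 5: "Amber",
  6: "Red", 7: "Red",
};

// Derives one band per policy column. Columns without a grade stay empty
// rather than being filled from a blended average.
function bandsFor(
  scores: CaseScores,
  bands: Record<Grade, Band>,
): Partial<Record<PolicyType, Band>> {
  const result: Partial<Record<PolicyType, Band>> = {};
  for (const policy of ["Mortgage", "Protection"] as const) {
    const grade = scores[policy];
    if (grade !== undefined) {
      result[policy] = bands[grade];
    }
  }
  return result;
}
```

With this shape, a case graded 2 for Mortgage and 6 for Protection shows two different bands side by side instead of a single averaged score that would hide the weaker policy.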

UI Strategy: The "Grouped View"

The new AI buttons and split-scoring columns had to fit on smaller screens, so simply adding more columns was not an option.

Space-Saving Layout

Instead of repeating Assessor names on every row, I implemented a "Grouped View" where cases are nested under a collapsible header (e.g., "▼ John Little").
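The grouping itself is a simple transformation of the flat case list. The following is a minimal sketch with hypothetical names (`CaseRow`, `AssessorGroup`, `groupByAssessor`) and invented sample case IDs; the "▶" glyph for a collapsed header is also an assumption, since only the expanded "▼" form appears in the design.

```typescript
// Hypothetical row and group shapes for illustration.
interface CaseRow {
  caseId: string;
  assessor: string;
}

interface AssessorGroup {
  assessor: string;
  expanded: boolean;
  cases: CaseRow[];
}

// Nests flat rows under one header per assessor, keeping first-seen order.
function groupByAssessor(rows: CaseRow[]): AssessorGroup[] {
  const groups = new Map<string, AssessorGroup>();
  for (const row of rows) {
    let group = groups.get(row.assessor);
    if (!group) {
      group = { assessor: row.assessor, expanded: true, cases: [] };
      groups.set(row.assessor, group);
    }
    group.cases.push(row);
  }
  return Array.from(groups.values());
}

// Header text for a group, e.g. "▼ John Little" when expanded.
function headerLabel(group: AssessorGroup): string {
  return `${group.expanded ? "▼" : "▶"} ${group.assessor}`;
}

// Usage with made-up sample data.
const grouped = groupByAssessor([
  { caseId: "C-1", assessor: "John Little" },
  { caseId: "C-2", assessor: "Sample Assessor" },
  { caseId: "C-3", assessor: "John Little" },
]);
```

The assessor name is rendered once per group rather than once per row, which is where the horizontal space for the new columns comes from.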

Abbreviated Headers

We optimised column titles (e.g., changing "Case Review Checklist" to "Checklist") to reclaim valuable horizontal pixel space for the action buttons.